A Healthy Check-In with F.O.A.K. Reality – A Whitepaper from IPA

By Krista Sutton

It’s no secret that we are big fans of Independent Project Analysis (IPA) here at New Energy Risk. Their whitepaper entitled “The Successful Management of New Technology Projects”, authored by Ed Merrow, is a good example of why. Bringing data-driven insights to the murky world of project management is a noble and underappreciated contribution to the ecosystem. In a world littered with spectacular project failures and fraught with advertisements for the newest project management fad promising all manner of miracles, IPA stands apart by bringing real-world, data-backed insights to help project professionals and organizations better develop and execute projects.

In this whitepaper focused on new technology (our favorite subject!), IPA calls out some of the common issues we at NER observe projects hitting as they go through development and execution, and offers some best practices to make sure your project doesn’t get too far over its skis.

#1: Data Is Key

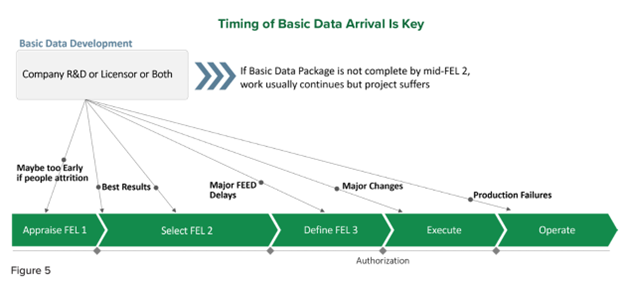

Too often, new technology companies do not have a fully developed basis of design, or it is changed late in development or even (gasp!) during execution. Part of the basis of design documentation includes the heat and material balance summarizing the expected operating conditions and yields, and the definitions of feedstock & product composition and contaminants. Merrow refers to this as “Basic Data”. The basis of design is the heart of a project. The project has been set up to fail if there are any missing, unreliable or unrealistic data points, or if the Basic Data does not capture expected variability in the process, feedstocks, products or boundary conditions. While you and your team might execute the project flawlessly, if you have an incorrect or underspecified basis of design, you will still produce an asset with operability and reliability problems that underdelivers on project benefits. More often than not, this means either investment to fix or, in the worst case, major write-downs or even company bankruptcies.

As Merrow points out, project teams consistently overestimate how much Basic Data is available, especially in projects where the extent of technology novelty is not clear. He calls out “integration projects”, where a commercially proven technology is being integrated into another commercial process, and points out that such projects often underestimate the impact of that integration. IPA is even able to quantify this impact: 2.5% more unknowns in the heat and mass balance, 2.7% project cost growth, 0.5 months of additional startup time, and a 4.7% decrease in production after 7-12 months (based on nameplate design of 100%) [1]. That might sound acceptable, but this effect is observed for EACH new integration, and BEFORE accounting for any new technology steps!
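To get a feel for how quickly these per-integration penalties stack up, here is a rough back-of-the-envelope sketch in Python. The per-integration figures are the ones quoted above from Table 1 of the whitepaper [1]; everything else is our own illustrative assumption, in particular the function name `integration_penalty` and the idea that the effects simply add up linearly across integrations (the whitepaper may combine them differently). Treat it as an illustration, not as IPA's method.

```python
# Per-integration effects as quoted from Table 1 of the IPA whitepaper [1].
COST_GROWTH_PCT = 2.7        # % project cost growth per new integration
EXTRA_STARTUP_MONTHS = 0.5   # additional startup months per new integration
PRODUCTION_LOSS_PCT = 4.7    # points of nameplate lost in months 7-12


def integration_penalty(n_integrations: int) -> dict:
    """Rough cumulative penalty for a number of new integrations.

    ASSUMPTION (ours, not IPA's): the effects are additive and
    independent, and no new technology steps are included.
    """
    if n_integrations < 0:
        raise ValueError("n_integrations must be non-negative")
    return {
        "cost_growth_pct": round(n_integrations * COST_GROWTH_PCT, 1),
        "extra_startup_months": round(n_integrations * EXTRA_STARTUP_MONTHS, 1),
        # Production is expressed against a nameplate design of 100%.
        "production_pct_of_nameplate": round(
            max(0.0, 100.0 - n_integrations * PRODUCTION_LOSS_PCT), 1
        ),
    }


if __name__ == "__main__":
    for n in (1, 3, 5):
        print(n, integration_penalty(n))
```

Under this simple additive assumption, a project with three new integrations would already be looking at roughly 8% cost growth, an extra month and a half of startup, and production around 86% of nameplate, before any genuinely new technology step is counted.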

In response, Merrow recommends pausing development work and figuring out a way to get the Basic Data required, e.g. through research, piloting, feedstock studies, cold flow studies, or field trials. The best practice that Merrow presents is to establish a “Basic Data Protocol” focusing on what is unknown (rather than a basis of design, which deals with what is known) and a process to obtain this data before heading into development work, to minimize repetition. Paul Szabo authored another relevant article on this topic, “Follow this Process Development Path” [2], which discusses the benefits of completing a virtual commercial design, and then thoughtfully scaling that design down in a way that allows for all this data to be generated during piloting. The gaps in Basic Data need to be resolved before finalization of the design and the start of front-end engineering.

Merrow points out that a pitfall to be avoided, and one that NER has observed often, is a bias towards addressing data gaps with seemingly conservative design decisions such as extra spares or redundancy. This can give a false sense of security, whereas an empirical validation could have revealed potential causes of failure that exceed the conservative design assumptions or bypass them completely (e.g. unexpected contaminants). There is no guarantee that the right problem has been identified, all the consequences upstream and downstream have been accounted for, or how severe the problem might be at full scale. It can also make the project much more expensive without addressing the risk. It is the equivalent of wearing a helmet to ride a bike and finding yourself on a busy three-lane highway.

The first thing that NER looks for as part of due diligence is whether the project has performed the appropriate analysis, testing, piloting, and scoping to have a complete basis of design, and how that has been integrated into the engineering documentation. We have observed that where there is a lack of integrated pilot data, or sparse operational data, a project invariably ends up going back to do additional studies or repeat parts of the Front-End Engineering Design (FEED), or gets stuck in perpetual development, unable to move forward due to a lack of clearly defined project objectives and data requirements.

Merrow provides a diagram in Figure 5 of his paper that nicely illustrates the effects of missing Basic Data.

#2: There is No Substitute for Development Work

Merrow has words of caution regarding the use of simulators and their applicability to predict the performance of different kinds of new processes. Simulators are useful for highlighting the important questions, but they are not a substitute for empirical process development work. When used properly, simulations can help narrow the range of experiments needed, and when calibrated against real-world results, they can be beneficial in both the design and operation of a process. A common pitfall is trusting a model just because it seems precise and detailed, and not verifying the accuracy of modeling results against experimental lab and process data. Merrow makes an important observation: simulations are better at predicting the behavior of homogeneous materials, and are less capable if the process involves solids or heterogeneous mixtures. He concedes that examples do exist of process development leaping from bench scale to commercialization (citing up to 350,000X scale-up!) with the aid of simulations, but these are the exception rather than the rule, and success cases are confined to processes where the number of new steps is low and the process fluids are liquids and gases that are well studied with extensive property libraries.

For most process development, a pilot or a PDU (process development unit: a focused pilot where only the new steps are included) will be needed. Merrow offers these best practices for pilots and PDUs:

- All new process steps should be included.

- Any steps that have dynamic interaction with the new process steps should be included.

- Any recycle streams and affected steps should be included.

- If the scale of the process step affects the behavior of the process (for example geometry, surface effects, flow regime, mixing, etc.), then you are likely to need to do a pilot at a larger scale. The essential principle here is to make sure that the equipment in the pilot or PDU adequately represents the larger-scale process.

To Merrow’s excellent points, I will add a fifth and sixth consideration based on NER’s experience:

- If you are innovating in an industry that expects meaningful sample sizes of on-spec product prior to executing an off-take contract (more common in solids processes or where your material will go into consumer products) or innovating in a nascent product market, you will need a fully integrated pilot, and it will need to be at the larger scale, which is typically referred to as a demonstration unit.

- If you have a heterogeneous feedstock that varies widely over time, then you will need a fully integrated pilot to understand variability effects on all the process steps, product quality, and yields.

In general, it rarely pays to skimp on piloting, and building a fully integrated pilot at the largest scale feasible will give the best outcomes in the commercial plant and avoid delays in the development process. In other words, do it right or be prepared to do it over.

#3: Solids are Hard (pun absolutely intended) – Plan Accordingly.

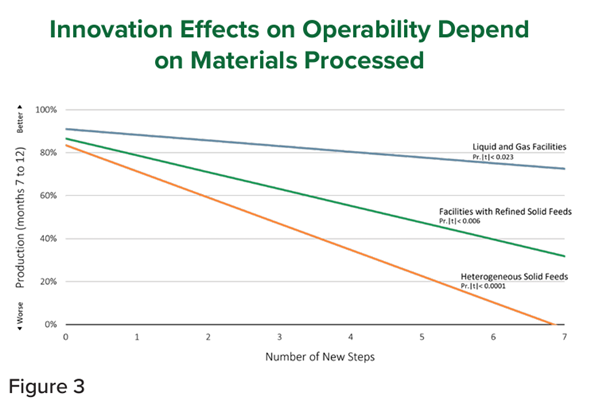

In the whitepaper, Merrow highlights the higher cost risk of new technology in the mining industry versus the chemical industry, and attributes this premium to the hard-to-model and often raw, unrefined, and heterogeneous solid feedstock. In such industries Basic Data are expensive to develop and scaling is not always straightforward, so the scaling steps are naturally smaller, leading to an increase in the cost and time needed to develop, deploy, and scale innovative technology. Indeed, in Figure 3 from the whitepaper, shown below, Merrow paints the stark contrast between innovation outcomes in liquid and gas technologies versus technologies involving solids handling.

At NER, we have observed firsthand some common problems encountered during initial operations of new technology processes that involve solids handling, including modifications to solids handling process steps, the need for extra spare parts for solids steps, and even redesign due to solids carryover and plugging. The best practices here include limiting the amount of innovation you are introducing in a single project, performing integrated piloting including all solids handling steps, using equipment as close to the commercial scale and design as possible, and running pilots on real world feedstock with a wide range of realistic contaminants. In addition, it is important to recognize that even if you do all these things, you will still need additional contingency built into your start-up plan to cover inevitable solids handling issues when you are innovating in this space.

At NER we regularly see projects not planning for additional commissioning and start-up time in their financial models. This ultimately leads to unrealistic expectations from high-level stakeholders and a lack of funds to cover operating expenses or modifications when startup inevitably takes longer than advertised. This can lead to new technology companies needing to raise additional funds in the midst of commissioning just to continue operations.

Final Thoughts

In summary, this new-technology-focused paper from IPA gives us some very useful benchmarking tools for new technology projects and reminds us of the best practices and pitfalls associated with technology development and deployment. With the energy transition on an accelerated timeline and everyone hurrying to “deploy, deploy, deploy”, it is important to remember Professor Bent Flyvbjerg’s sage advice to projects from the highly quotable “How Big Things Get Done” [3]:

“Planning is relatively cheap and safe. Delivery is expensive and dangerous. Good planning boosts the odds of quick effective delivery, keeping the window of risk small and closing it as soon as possible”

Sources:

[1] Table 1, Merrow, E. W. & Independent Project Analysis, Inc. (2024, July). The Successful Management of New Technology Projects. https://www.ipaglobal.com/wp-content/uploads/2024/07/New-Technology-White-Paper.pdf

[2] Szabo, P. (2013, December). Follow this Process Development Path. Chemical Engineering Progress, 24-27.

[3] Flyvbjerg, B. & Gardner, D. (2023). How Big Things Get Done: The Surprising Factors that Determine the Fate of Every Project from Home Renovations to Space Exploration, and Everything in Between. Penguin Random House.